Three scholars affiliated with University College London have posted a paper on the dangers that increasingly sophisticated algorithms pose for markets, employing a fascinating analogy from paleontology.

But we begin with their big picture. The authors are concerned that in the near future an “ecology of trading algorithms across different institutions may ‘conspire’” and bring about a result analogous to what happened with LIBOR in the scandals that came to light in 2012, but with no humans in sight to blame.

These UCL scholars are also worried that the trading algorithms may be “subject to subversion through compromised datasets.” Here their analog is Tay. As a refresher: Tay was an artificial-intelligence-driven chatbot released by Microsoft, by way of Twitter, in March 2016. Within 16 hours, Tay was spouting racist and otherwise inflammatory messages. In due course it had to be killed off.

Microsoft has blamed trolls who it says flooded Tay over the hours after its release with deliberately offensive interactions. Tay was designed to learn from and improve with her interactions. By gaming that feature of her design, the trolls got her to incorporate their own prejudices and, to be blunt, hate.

Beyond an Incremental Upgrade

The paper, “Algorithms in Future Capital Markets,” by Adriano Koshiyama, Nick Firoozye, and Philip Treleaven, discusses why both financial institutions and regulators want to maintain a “modicum of human control” behind the algorithms, so that the markets don’t suffer from an upscaled version of those earlier fiascos.

Part of the problem is the plural: “algorithms.” There are lots of algorithms, more are always under development, and their relationships to each other are not necessarily obvious to the slow human brain. A single trading system might, for example, use Generative Adversarial Networks (GANs) to deal with data scarcity issues, Long Short-Term Memory networks (LSTMs) for trading, and Transfer Learning for knowledge sharing across markets and systems. The emergent properties of these relations are part of what may get away from us.

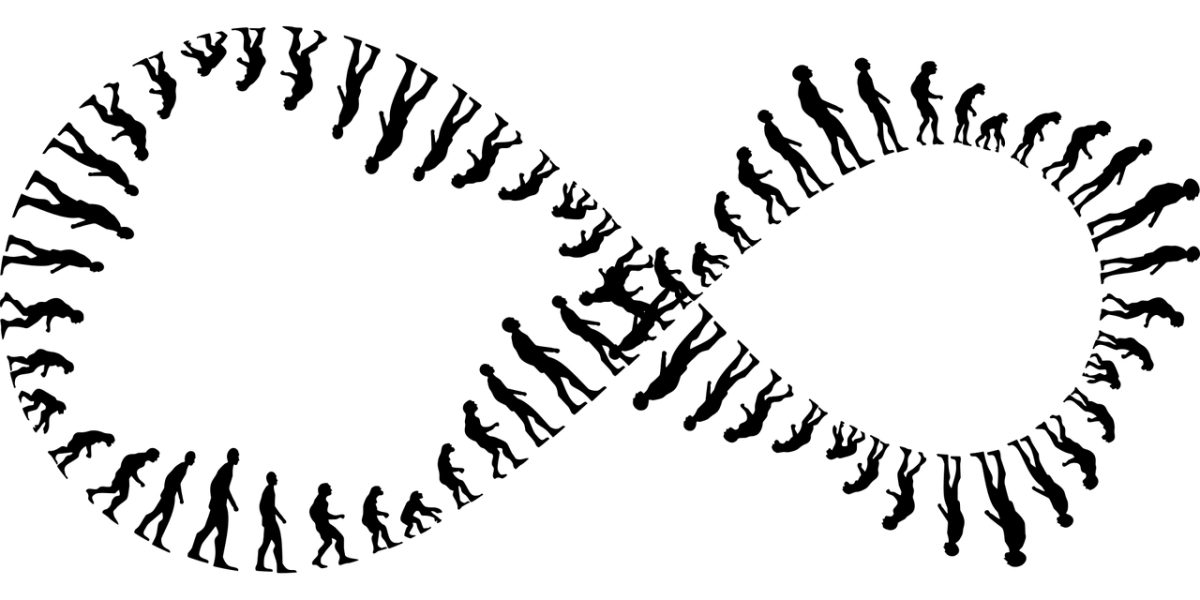

This is where we come to the paleontology. What is coming is not an “incremental upgrade,” these scholars suggest, but a sort of “Cambrian explosion.” It is worthwhile pausing over that term. The Cambrian explosion is a peculiar finding of modern biology. Most major animal phyla first appear in the fossil record within a relatively brief “window” in the Cambrian period. The window is about 20 million years wide, and it opened about 540 million years ago.

What are the authors saying with this analogy? Trading is about to be re-shaped as completely as life on earth was re-shaped at the time of that explosion of phyla. Indeed, the analogy can be taken further. The Cambrian explosion occurred as the end-product of a long gradual change in the composition of the planet’s atmosphere. Plants, and thus photosynthesis, had existed for a long time before this explosion. Given the nature of photosynthesis, the amount of free oxygen in the atmosphere had drastically increased over the eons. After some threshold was passed, the earth was primed for the emergence of a wide range of creatures who could consume all of that oxygen. The plants, in short, had created the chemical preconditions for the animals, which were thereafter going to munch on them.

Likewise, all the technological development in the worlds of stock and bond trading and alternative investment to date may be seen as the gradual build-up of oxygen in an atmosphere. It has been creating the preconditions for something completely different, as soon as some still invisible threshold is passed.

What these authors call the “main disrupting forms of [machine] learning” now observable are those that have been around for more than a decade. They correspond to pre-Cambrian life: deep learning, adversarial learning, transfer and meta learning. But the build-up of higher levels of oxygen in the atmosphere may be represented on the other side of the analogy by the ever-greater availability of data, computing power, and infrastructure.

A Final Thought

One fascinating detail in this report is its critique of “model-agnostic explainability.” This is the trend toward creating mathematical techniques for the interpretation of decision drivers that assume nothing about the model underlying the trading algorithm. The authors warn that this involves “running an additional model on top of an already complex model,” introducing a “layer of additional inaccuracy” and removing output “one step further … from the reality.” That is not advisable. After all, humans are trying to stay in the game, not remove ourselves further from it.
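The authors' warning about a “layer of additional inaccuracy” can be illustrated with a minimal sketch. It is not taken from the paper: the `black_box` function is a hypothetical stand-in for an opaque trading model, and the linear surrogate is only one simple example of an explanation model fitted on top of another model's outputs. The point is that the surrogate's fit to the black box (its fidelity) is imperfect, so any explanation read off the surrogate carries its own error.

```python
import numpy as np


def black_box(X):
    """Hypothetical stand-in for an opaque, nonlinear trading model."""
    return np.tanh(2.0 * X[:, 0]) + X[:, 0] * X[:, 1]


def fit_linear_surrogate(X, y):
    """Fit a linear model (with intercept) to the black box's outputs."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_surrogate(coef, X):
    """Evaluate the fitted linear surrogate on inputs X."""
    A = np.column_stack([X, np.ones(len(X))])
    return A @ coef


def r_squared(y_true, y_pred):
    """Share of the black box's output variance the surrogate reproduces."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return 1.0 - ss_res / ss_tot


# Synthetic inputs (an assumption; no real market data is used here).
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 2))

# The "explanation" is a second model trained on the first model's outputs.
y_model = black_box(X)
coef = fit_linear_surrogate(X, y_model)
fidelity = r_squared(y_model, predict_surrogate(coef, X))

print("surrogate weights (feature 0, feature 1, intercept):", coef)
print("fidelity to the black box (R^2):", fidelity)
```

In this toy setup the surrogate's weights look like a tidy explanation, yet a fidelity well below 1 means much of the black box's behavior (here, the interaction between the two inputs) is simply missing from it. That gap is one way to read the paper's phrase about output being removed “one step further … from the reality.”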