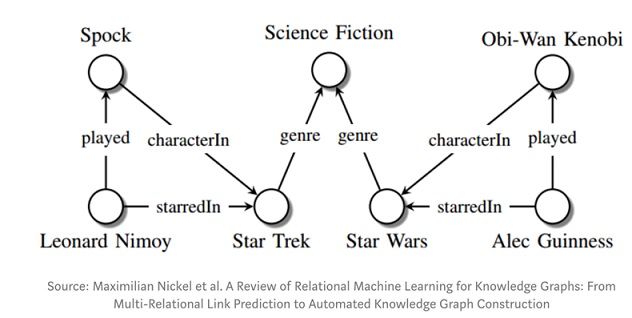

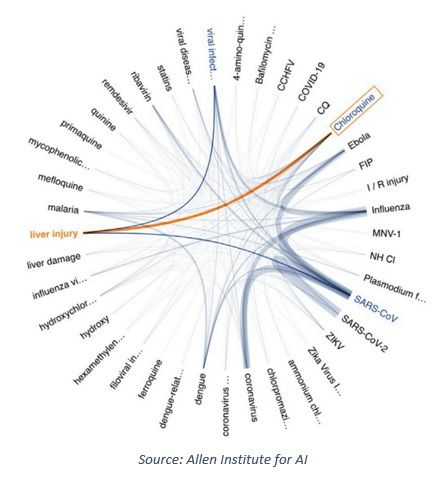

By Mehrzad Mahdavi, PhD, Executive Director, FDP Institute

Imagine if your social media posts or articles were generated automatically by AI as if they were authored by you. Well, that day is here! The next generation of NLP, called “Generative Pre-trained Transformer, third generation”, or GPT-3, was recently released for beta testing by OpenAI. GPT-3, trained on massive amounts of data, can write stories, generate code, compose business reports, and much more. Each of these developments can be disruptive in multiple fields.

To perform such tasks, GPT-3 uses an enormous training data set, the largest to date: a filtered version of the contents of the web, plus Wikipedia and then some, containing about half a trillion words, according to a June 2020 Forbes article. The GPT-3 model has 175 billion parameters, more than 100 times as many as its predecessor, GPT-2, and 10 times as many as Microsoft’s Turing NLG. This vast pre-trained AI system enables GPT-3 to identify patterns from millions of text snippets and synthesize them on demand in response to questions.

Example applications of GPT-3 in the financial services sector include sentiment analysis (and its relationship to market signals), financial advice generation, quant strategy generation, and trading.

Another new and growing field is Knowledge Graph technology, which can be used in search engines, social networks, and e-commerce platforms to store and retrieve information with precision and speed. Knowledge Graphs contain “context” alongside raw data and, when used with AI systems, provide better transparency and interpretability of their results. Knowledge Graphs represent entities (nodes) and relations in multiple dimensions, and therefore reveal dependencies that would otherwise go unnoticed.

An example diagram below shows a Knowledge Graph for a question related to Star Trek and Star Wars.
Another example of a Knowledge Graph, created using artificial intelligence, was produced by The Allen Institute for AI and Semantic Scholar (pictured below). The data set is a current body of 130,000 abstracts, plus full-text papers related to past and present coronaviruses. This graph was constructed to visualize the network of diseases and chemicals associated with ‘‘chloroquine’’.

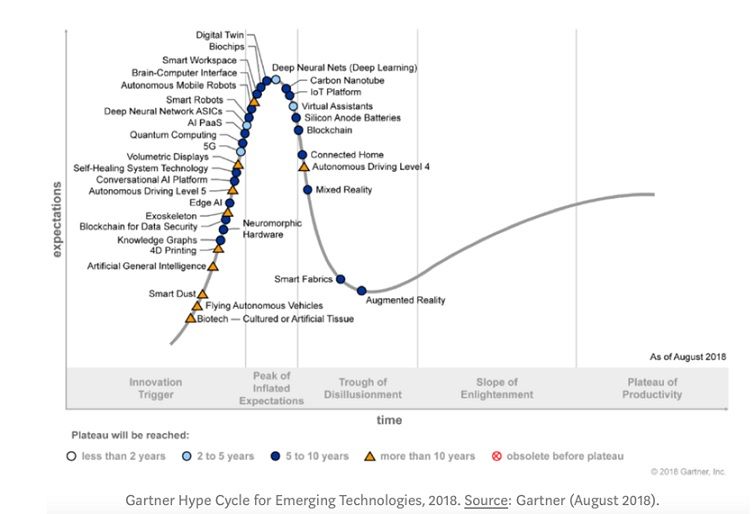
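
To make the idea of entities as nodes and relations as edges more concrete, here is a minimal Python sketch. It is an illustration only, not how any of the systems mentioned in this article are built. The fund, companies, region, and relation names are all hypothetical, and the breadth-first search is just one simple way to surface an indirect dependency that a keyword search on the fund's holdings would miss.

```python
from collections import defaultdict, deque

# Hypothetical facts, stored as (subject, relation, object) triples.
TRIPLES = [
    ("Fund A", "holds", "Acme Auto"),
    ("Acme Auto", "supplied_by", "ChipCo"),
    ("ChipCo", "located_in", "Region X"),
    ("Fund A", "holds", "Beta Retail"),
    ("Beta Retail", "ships_through", "Port Y"),
]


def build_graph(triples):
    """Index triples by subject so each node maps to its outgoing edges."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def find_path(graph, start, target):
    """Breadth-first search: return the chain of triples from start to target, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == target:
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None


if __name__ == "__main__":
    graph = build_graph(TRIPLES)
    # "Region X" never appears among Fund A's direct holdings,
    # yet the graph links the two through a supplier relationship.
    for subject, relation, obj in find_path(graph, "Fund A", "Region X"):
        print(f"{subject} --{relation}--> {obj}")
```

In this toy graph the fund is three hops away from the region, so an event affecting the hypothetical Region X would show up as an exposure only once the relations are followed.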

Combining these two powerful technologies, AI (e.g., GPT-3) and Knowledge Graphs, will likely provide new capabilities in many disciplines and improve the accuracy and trustworthiness of AI systems.

Use cases in financial services

Active investing is all about identifying economic and financial relationships and uncovering hidden risks and opportunities. A combination of GPT-3 AI systems and Knowledge Graphs provides capabilities to uncover new opportunities from hidden information. Hedge funds and asset management firms are increasingly using alternative datasets released at different frequencies (daily, monthly, quarterly), in different formats (structured and unstructured), and even in different languages. GPT-3 and Knowledge Graphs are a well-suited combination for generating insights from such heterogeneous and dynamic data sources.

Examples of current financial products leveraging AI-based Knowledge Graphs for thematic investing, according to Yewno Inc, include: STOXX AI Global AI Index, Nasdaq AI & Big Data Index, AMUNDI STOXX Global Artificial Intelligence ETF (GOAI), DWS’s Artificial Intelligence & Big Data ETF (XAIX:GR), and several more.

An additional application of these technologies is assessing portfolio risk exposure. Knowledge Graphs can identify risk factors affecting a portfolio that may otherwise go undetected when using keywords alone.

Key challenges and takeaways

Adoption of AI and Knowledge Graphs in the financial industry is a natural next step. However, we need to separate the hype from reality. As shown in the Gartner hype cycle for AI, below, both Knowledge Graph and GPT-3 technologies should be considered to be in the “innovation trigger” phase, with a five- to ten-year time horizon for widespread production use.

As growth accelerates, we would caution against hype and premature expectations. Some of the concerns are discussed below.

GPT-3 is only as good as its training data set: it uses correlations to arrive at a synthesized outcome and does not understand the language it generates. As a result, when asked questions outside its training ecosystem (albeit a very extensive one), its responses could be unreliable. This point is further discussed in a Forbes article of July 19, 2020:

“A related shortcoming stems from the fact that GPT-3 generates its output word-by-word, based on the immediately surrounding text. The consequence is that it can struggle to maintain a coherent narrative or deliver a meaningful message over more than a few paragraphs. Unlike humans, who have a persistent mental model—a point of view that endures from moment to moment, from day to day—GPT-3 is amnesiac, often wandering off confusingly after a few sentences. Put simply, the model lacks an overarching, long-term sense of meaning and purpose. This will limit its ability to generate useful language output in many contexts.”

And a final thought, articulated by Ganesh Mani of FDP Institute’s advisory board:

“Updating the knowledge graph is a tricky but important problem, especially in high-velocity domains: COVID-19 has brought the issue in sharp focus, but we have also seen that with negative interest rates and, more recently, with negative oil prices, however fleeting. A nuanced approach and human-machine joint effort is needed to reliably update the knowledge, without which systems may exhibit significant brittleness.”

References

- AI’s struggle to reach “understanding” and “meaning,” Ben Dickson, July 13, 2020
- AI: New GPT-3 language model takes NLP to new heights
- Yewno
- Why is AI taking so long?
- Giving GPT-3 a Turing Test, July 2020
- GPT-3 Is Amazing—And Overhyped, July 19, 2020
- Artificial intelligence: The dark matter of computer vision, June 1, 2020
- An AI Breaks the Writing Barrier, Aug 22, 2020
- This know-it-all AI learns by reading the entire web nonstop, Sept 4, 2020
- OpenAI’s new language generator GPT-3 is shockingly good—and completely mindless, July 20, 2020
- Connecting the Dots: Using AI & Knowledge Graphs to Identify Investment Opportunities, March 28, 2019
- Knowledge Graphs And Machine Learning -- The Future Of AI Analytics?, June 26, 2019
- Knowledge Graph App in 15 min, March 11, 2020
- Knowledge Graph: The Perfect Complement to Machine Learning, July 18, 2019
- Viral Science: Masks, Speed Bumps, and Guard Rails, Sept 11, 2020
- Deep Learning’s Carbon Emissions Problem, June 17, 2020
- The Chinese Room argument, by John Searle


Interested in contributing to Portfolio for the Future? Drop us a line at content@caia.org